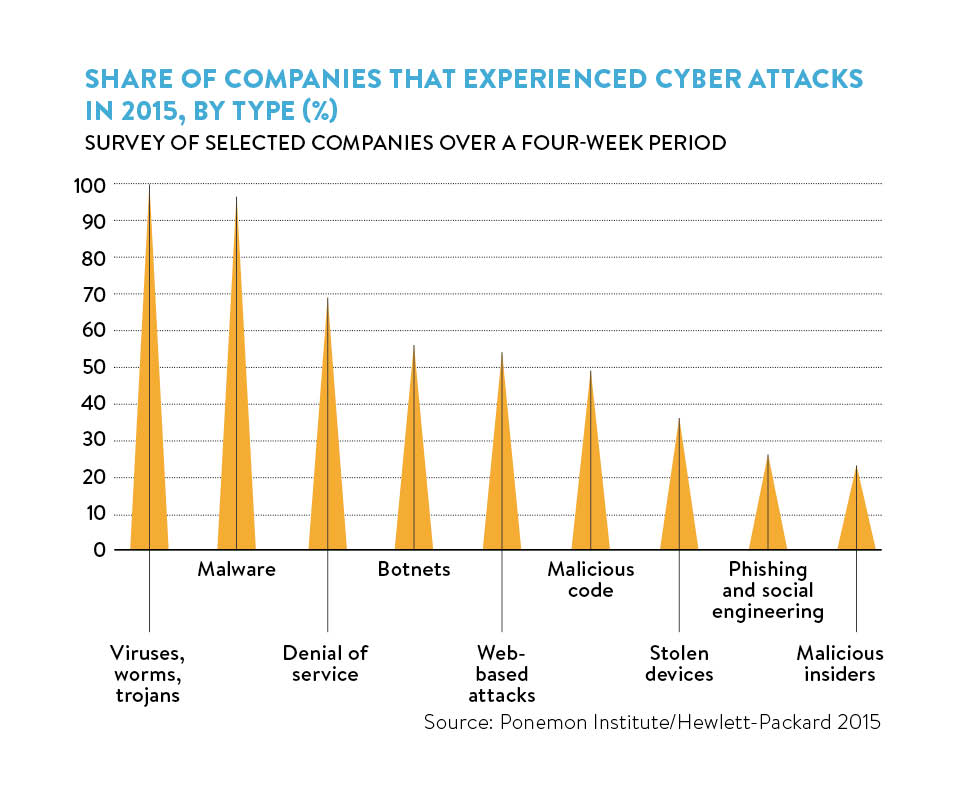

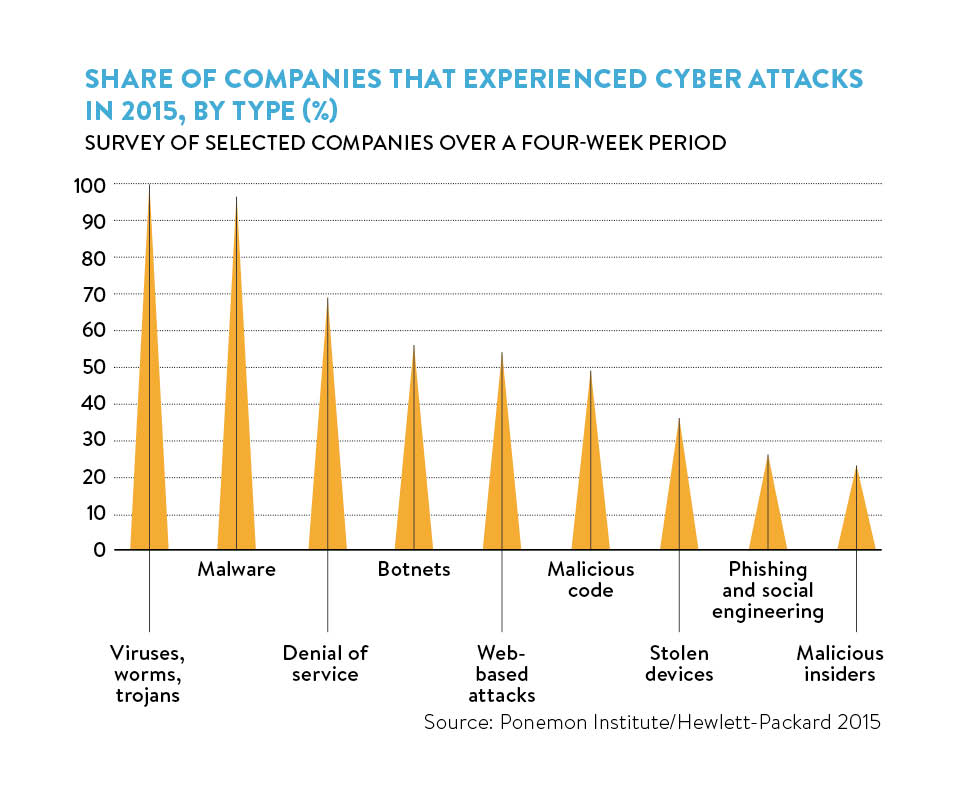

The EMC global Data Protection Index revealed 22 per cent of UK businesses have suffered data loss in the last year. This comes at an estimated average cost of £920,000 to breached organisations. Cyber crime will likely cost the global economy £335 billion this year alone, according to the World Economic Forum’s Global Risks Report 2016.

Meanwhile, the Hamilton Place Strategies’ report, Cybercrime Costs More Than You Think, estimates the median cost of cyber crime has increased by nearly 200 per cent in the last five years and is likely to continue growing, while Juniper Research predicts the overall cost of data breaches will rise by £1.58 trillion up to 2019.

It’s obvious the bad guys are winning and something needs to change. That change could well come in the shape of solutions driven by artificial intelligence or AI.

Playing catch-up

What do security experts actually mean when they talk about AI? “In the context of cyber security solutions, artificial intelligence means the use of machine-learning techniques to enable computers to learn from the data in a similar way to humans,” says Eldar Tuvey, co-founder and chief executive at mobile security vendor Wandera.

A formal branch of AI and computational learning theory, machine learning focuses on building systems that learn directly from the data they are fed, so they effectively program themselves in order to make predictions.

Industry verticals, such as healthcare, insurance, finance and high-frequency trading, have applied machine-learning principles to analyse large volumes of data and drive autonomous decision-making. Now cyber security is catching up.

“Machine learning as applied to computer security focuses on prediction based on thousands of properties learnt from earlier data, whereas current techniques, such as signatures, heuristics and behaviour-monitoring, rely on simplistic, easily evaded data points,” says Lloyd Webb, director of sales engineering in Europe, the Middle East and Africa with AI security vendor Cylance.

The key differentiator of this technology is that both old, previously known attacks and new, previously unknown attacks, including those not yet written or conceived, are detectable. “This is the power of predictive machine-learning technologies to predict the future,” says Mr Webb.
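To make the contrast between signatures and learned prediction concrete, here is a minimal sketch in Python. It is not Cylance's method: the sample names, the two numeric features and the nearest-centroid classifier are all assumptions chosen for illustration, and real products learn from thousands of properties rather than two.

```python
import hashlib

# Hypothetical toy data: (raw_bytes, feature_vector, label).
# The two features are invented for illustration, e.g. a packing score
# and a count of suspicious imports.
TRAINING = [
    (b"evil-payload-v1", (0.9, 8.0), "malicious"),
    (b"evil-payload-v2", (0.8, 7.0), "malicious"),
    (b"office-macro-ok", (0.2, 1.0), "legitimate"),
    (b"photo-viewer-ok", (0.1, 2.0), "legitimate"),
]


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Signature approach: exact match against hashes of known bad files.
SIGNATURES = {sha256(raw) for raw, _, label in TRAINING if label == "malicious"}


def signature_detect(raw: bytes) -> bool:
    return sha256(raw) in SIGNATURES


def train_centroids(samples):
    """Learn one average feature vector (centroid) per label."""
    sums, counts = {}, {}
    for _, features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in acc)
        for label, acc in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the features."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: dist2(centroids[label], features))


CENTROIDS = train_centroids(TRAINING)

# A slightly modified, never-before-seen variant of the malicious family.
variant_raw = b"evil-payload-v3"
variant_features = (0.85, 7.5)

print(signature_detect(variant_raw))         # the hash is not in the known set
print(predict(CENTROIDS, variant_features))  # the features still look malicious
```

In this toy setup the variant slips past the signature check because its hash has changed, while the learned model still places it with the malicious samples because its properties resemble theirs.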

Neither he nor Cylance is alone in this belief. Guy Caspi, chief executive at DeepInstinct, is also a deep-learning evangelist. “Deep neural networks are the first family of algorithms within machine learning that do not require manual feature engineering,” he explains. “Instead they learn on their own to identify the object on which they are trained by processing and learning the high-level features from raw data.”

When applied to cyber security, the deep-learning core engine is trained to learn, without any human intervention, whether a file is malicious or legitimate. “The result of this independent learning is highly accurate detection – over 99.9 per cent of both substantial and slightly modified malicious code,” says Mr Caspi.

Martin Borrett, an IBM distinguished engineer and chief technology officer of IBM Security Europe, thinks the IBM Watson system shows just what these AI-driven solutions are capable of. Watson is a cognitive computing system that learns at scale, reasons with purpose and interacts with humans naturally. It is taught, not programmed, and understands not only the language of security, but the context in which it sits.

“Watson will learn to understand the context and connection between things like a security campaign, threat actor, target and incident,” Mr Borrett says. “This leads to cognitive systems not only understanding the language and connections, but learning from them and offering knowledge and suggested defence actions to security professionals.”

The perfect storm

It all sounds pretty straightforward, so why has it taken so long for AI to come to the rescue of businesses under attack from cyber criminals? The truth is that AI-driven solutions have had to wait for a perfect storm of four technological advances all blowing together.

“The most powerful results are likely to come where cheap high-speed processing capacity, data analytics that enables inferences to be drawn even from unstructured data, neural machine learning and the availability of large data sets of relevant digitised information to work on can be harnessed together,” says Professor Sir David Omand, former director of GCHQ (Government Communications Headquarters) and now senior adviser to Paladin Capital.

He thinks this puts GCHQ in a good position at the eye of the AI storm. “Ninety-five per cent of the cyber attacks on the UK detected by the intelligence community in the last six months came from the collection and analysis of bulk data,” he says.

“Right now GCHQ is monitoring cyber threats from high-end adversaries against 450 companies across the UK aerospace, defence, energy, water, finance, transport and telecoms sectors.”

If malware, for example, can be detected at warp speed with the assistance of AI, then it may be possible to block the attack, for instance by deploying active defences and reconfiguring ICT systems in response. “Just like the human immune system in the face of a hostile virus,” says Sir David.

Martin Sweeney, chief executive at Ravelin, a UK specialist that combines machine learning with graphing networks and behavioural analytics to help businesses detect fraud, sees AI-driven solutions as our immediate future.

“Current automated systems, in fraud at least, fall down because they try to mirror human decisioning – if X transaction originated in Y location with Z purchase price then decline,” he explains. “These rules-based approaches require constant gardening and fail to allow the machines themselves to learn.”
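The difference Mr Sweeney describes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Ravelin's system: the transaction history, the location codes, the hand-written rule and the smoothed per-location fraud rate are all hypothetical, and the "learned" model is about the simplest one possible.

```python
from collections import defaultdict

# Hypothetical labelled history: (location, amount_gbp, was_fraud).
HISTORY = [
    ("GB", 40.0, False), ("GB", 900.0, False), ("GB", 55.0, False),
    ("XX", 35.0, True), ("XX", 20.0, True), ("XX", 950.0, True),
    ("FR", 700.0, False), ("FR", 30.0, False),
]


def rule_based_decline(location, amount):
    # Hand-written rule in the "X, Y, Z then decline" style. Someone has to
    # keep editing this by hand as fraud patterns shift.
    return location == "FR" and amount > 500.0


def learn_fraud_rates(history):
    """Estimate a fraud rate per location from labelled outcomes."""
    fraud = defaultdict(int)
    total = defaultdict(int)
    for location, _amount, was_fraud in history:
        total[location] += 1
        fraud[location] += int(was_fraud)
    # Laplace smoothing: add one fraud and one non-fraud pseudo-observation
    # so that small samples do not produce rates of exactly 0 or 1.
    return {loc: (fraud[loc] + 1) / (total[loc] + 2) for loc in total}


def learned_decline(rates, location, threshold=0.5):
    # Locations never seen in training fall back to a neutral 0.5 estimate,
    # which does not exceed the default threshold.
    return rates.get(location, 0.5) > threshold


rates = learn_fraud_rates(HISTORY)
print(rule_based_decline("FR", 700.0), learned_decline(rates, "FR"))
print(rule_based_decline("XX", 35.0), learned_decline(rates, "XX"))
```

On this made-up history the fixed rule declines a legitimate French purchase and waves through a small fraudulent one, while the estimate learned from outcomes does the opposite. Retraining on fresh data updates the rates without anyone rewriting a rule.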

Mr Sweeney is sure that in the coming months the AI models will improve and the detection rates along with them. The main change, he insists, will be “the comfort that merchants feel in moving their fraud management from a largely human process to a largely automated one”.

HYBRID AI: UNLEASHING THE SECURITY CYBORG

How important is it for artificial intelligence (AI) and humans to work together to monitor cyber risk, and can such a hybrid approach provide better results than either humans or AI alone?

“In practical terms, AI eliminates human errors,” says Cylance’s Lloyd Webb. “Machines don’t get tired, they don’t need tea or smoke breaks, they tirelessly continue to operate at levels of scale and performance that will always outclass human beings.”

As savage as that sounds, he has a point; humans have neither the brainpower nor the physical endurance to keep up with the overwhelming volume and sophistication of modern threats.

Yet, for now at least, most security industry experts agree that we cannot eliminate humans from the AI security spectrum. For a start, as Dr Kevin Curran, reader in computer science at Ulster University, points out: “Most practical AI-driven cyber security approaches are a hybrid approach. IT managers must prepare their IT ecosystems for machine learning by capturing, aggregating and normalising relevant data beforehand.”

That’s before the machines have even been allowed near the data, let alone produced their threat predictions. Once you reach that stage, there’s the critical role of humans in determining how accurate the analysis has been.
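The preparation Dr Curran describes, capturing, aggregating and normalising data, might look something like the following Python sketch. The host names, the event type and the choice of min-max scaling are assumptions made for illustration; a real deployment would draw on many log sources and features.

```python
from collections import Counter

# Hypothetical captured events: (host, event_type).
EVENTS = [
    ("web-01", "failed_login"), ("web-01", "failed_login"),
    ("web-01", "failed_login"), ("web-01", "failed_login"),
    ("db-01", "failed_login"),
    ("mail-01", "failed_login"), ("mail-01", "failed_login"),
    ("web-01", "password_change"),
]


def aggregate(events, event_type):
    """Count how many events of one type each host produced."""
    return Counter(host for host, kind in events if kind == event_type)


def min_max_normalise(counts):
    """Rescale counts to the range 0..1 so features are comparable."""
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    span = hi - lo
    if span == 0:
        # Every host has the same count, so none stands out.
        return {host: 0.0 for host in counts}
    return {host: (n - lo) / span for host, n in counts.items()}


counts = aggregate(EVENTS, "failed_login")
features = min_max_normalise(counts)
print(features)
```

Only after a step like this would the normalised values be handed to a learning system, with a person still checking whether its output makes sense.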

As Chemring Technology’s Daniel Driver concludes: “The AI component provides the support, analogous to the productivity of a team over an individual, offering indications and suggestions which can be accepted or rejected by the user.”

It is likely, he adds, that a collaborative approach will be taken in the foreseeable future between artificial intelligence and humans with the AI component effectively becoming one of the team.
