At what point does a factory worker lose capacity because of a co-bot? What if a logistics artificial intelligence (AI) system is fooled by new data and makes a fatal error? What if board executives are duped by hostile chatbots and act on misinformation?

None of these bot-gone-bad scenarios is science fiction. Remember the Tesla on Autopilot that failed to distinguish the white side of a trailer from a brightly lit sky, the racist Microsoft bot that learnt from bad examples and the bots that sought to influence the 2016 US presidential election. Oxford University research forecasts that 45 per cent of jobs will be augmented by AI by 2025, yet, alarmingly, policing the robots remains an afterthought.


Alan Winfield, the only professor of robot ethics in the world, identifies the problem of technologies being introduced rapidly and incrementally, with ethics playing catch-up. “We need to build and engineer AI systems to be safe, reliable and ethical – up to now that has not happened,” he says. Professor Winfield is optimistic about workforce augmentation and proposes an “ethical black box”, akin to an aircraft’s flight recorder, that investigators could examine in the event of an AI catastrophe.

But not all AI is easy to police, and some varieties are more traceable than others, says Nils Lenke, board member of the German Research Institute for Artificial Intelligence, the world’s largest AI centre. Unlike traditional rules-based AI, which produces “intelligent” answers by applying explicit rules and formulae, learning algorithms based on neural networks can be opaque and impossible to reverse engineer, he explains.

Neural networks organise themselves as they search for patterns in unstructured data. The drawback, says Dr Lenke, is that in a system of hundreds of thousands of connections learning from thousands of examples, it is impossible to say which neuron triggered another. “When an error occurs, it’s hard to trace it back to the designer, the owner or even the trainer of the system, who may have fed it erroneous examples,” he says.

Governments are beginning to tackle the complexities of policing AI and to address issues of traceability. The European Union General Data Protection Regulation (GDPR), which applies from May 2018, will require companies to be able to explain how they reach algorithmic decisions. Earlier this year, the European Parliament voted to call for legislation on non-traceable AI, including a proposed insurance scheme to cover liability shared by all parties.

More work is needed to create AI accountability, however, says Bertrand Liard, partner at global law firm White & Case, who predicts that proving liability will become more difficult as technology advances faster than the law. “With [Google’s] DeepMind now creating an AI capable of imagination, businesses will soon face the challenge of whether AI can own or infringe intellectual property rights,” says Mr Liard.

In the meantime, an existing ethical gap that needs fixing now is the lack of regulation requiring companies to declare their use of bots or AI. If a chatbot gives a reasonable response online, there’s a natural assumption that we are communicating with a fellow human being. “Without an explicit warning, as recipients we have no opportunity to evaluate them and can become overwhelmed,” says Dr Lenke, who is also senior director of corporate research at Nuance Communications.

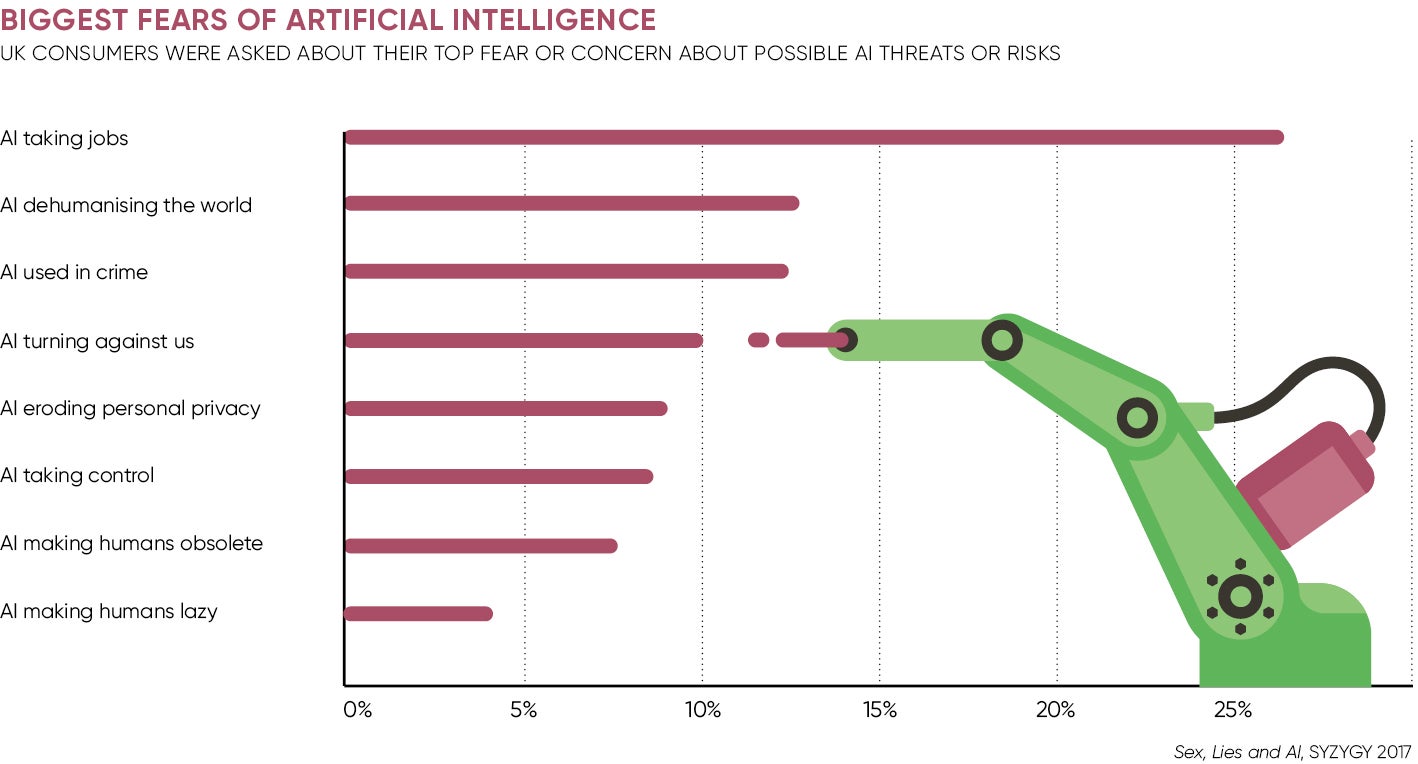

His concerns chime with the findings of a 2017 report, Sex, Lies and AI, by digital agency SYZYGY, which found high levels of anxiety about undeclared conversational and video user interfaces. More than 85 per cent of respondents wanted AI to be regulated by a “Blade Runner rule”, making it illegal for chatbots and virtual assistants to conceal their identity. A cause for even greater concern, however, might be chatbots fronting an AI application capable of interpreting emotions.

Nathan Shedroff, an academic at the California College of the Arts, warns that the conversational user interface can be used to harvest such “affective data”, mining facial expressions or voice intonation for emotional insight. “There are research groups in the US that claim to be able to diagnose mental illness by analysing 45 seconds of video. Who owns that data and what becomes of it has entered the realm of science fiction,” he says.

As executive director of Seed Vault, a fledgling not-for-profit platform launched to authenticate bots and build trust in AI, Professor Shedroff thinks transparency is a starting point. “Science fiction has for millennia anticipated the conversational bot, but what it didn’t foresee were surrounding issues of trust, advertising and privacy,” he says. “We are on the cusp of an era where everything is a bot conversation with a technical service behind it.”

Affective data harvested from employees could be used for nefarious and covert purposes, says Professor Shedroff. He cites examples such as employees inadvertently sharing affective data that, in aggregate, amounts to invaluable insider information, and third-party suppliers collecting data that they then share or sell. In his view, GDPR does not cover affective data, and companies are neither aware of the threat nor dealing with it. “We’re in new territory,” says Professor Shedroff.

While legislators and regulators gear up, businesses such as Wealth Wizards are not putting competitive advantage on hold. The online pension advice provider uses AI and plans to use chatbots, but complies with Financial Conduct Authority rules, says chief technology officer Peet Denny. “Basically, anything that is required of a human we apply to our AI tools. It’s not designed for AI, but it’s a start,” he says.


In the absence of AI regulation and laws, a plausible approach advocated by UK innovation charity Nesta is to hire employees to police the bots. In recent months tech giant Facebook has started to do exactly that, recruiting thousands of staff. “If social media giants deem it necessary to police their algorithms, it matters even more for high-stakes algorithms such as driverless cars or medicine,” says Nesta’s chief executive Geoff Mulgan.

Manufacturing, an industry that has used robots for decades and is now embracing co-bots, is perhaps the best model for how we should treat autonomous systems. Manufacturers are installing more intelligent robots on the factory floor, and Ian Joesbury, director at Vendigital, anticipates a mixed workforce in the future. “Skilled technicians will work alongside a co-bot that does the heavy lifting and quality assurance,” he says.

Manufacturers are reviewing human resources practices for a new situation in which a human works alongside a robot that never tires, Mr Joesbury adds. But the way the sector polices current-generation robots is a graphic warning of how much respect future AI will demand. “Robots can be unpredictable in the way they respond to instructions,” he says. “You often see them caged on factory floors so they can’t hurt the human workers.”