If you want to find out what the public thinks about something, the obvious thing to do is commission a poll. It’s cheap, scientific and impartial. The only problem is that confidence in the polling industry has taken a bit of a knock. Can we believe what the polls say?

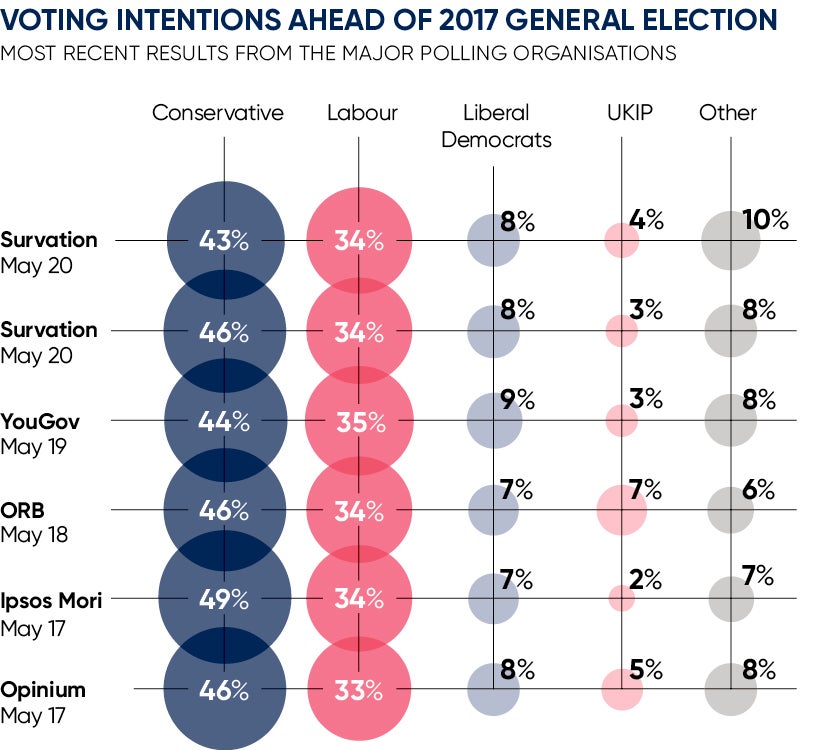

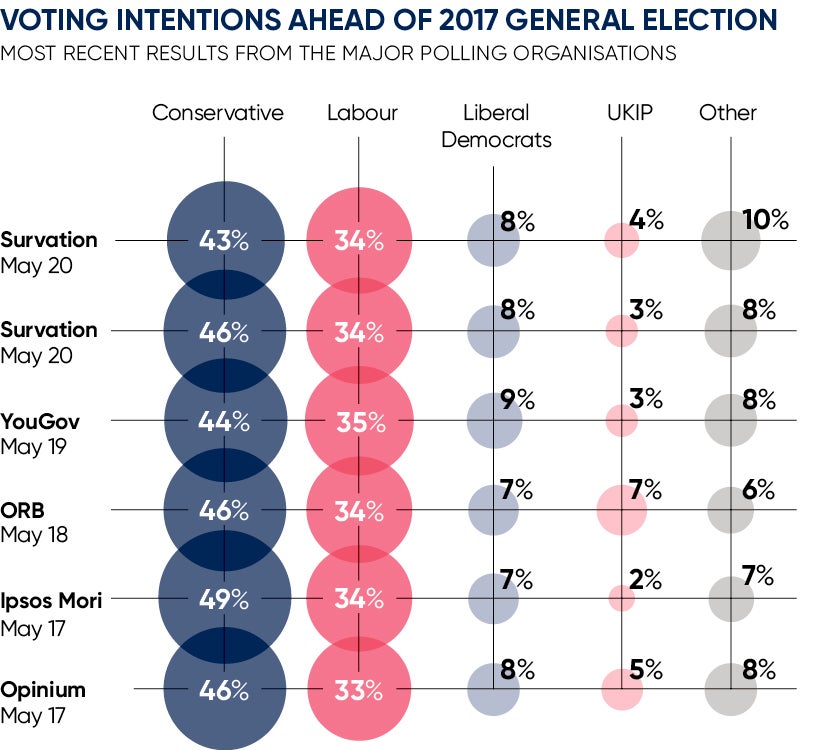

The recent run of wrong calls is dispiriting. The 2015 general election result was a total shock to anyone who trusted the polls. Time after time the pollsters gave Labour a lead or called it neck and neck. No individual poll put the Tories more than a point ahead.

In the end the Conservatives romped home 38 per cent to Labour’s 31 per cent. Ed Miliband was toast and former Liberal Democrat leader Paddy Ashdown was forced to eat his hat, or at least a cake version, as he’d promised on air.

Then Brexit caught punters by surprise. This time the polls were tight, but betting markets rated Leave as 10 to 20 per cent likely. And then Donald Trump won. At one point the celebrated US pollster Nate Silver had Trump with a 2 per cent chance of winning the Republican nomination. Even predictions of the French election were inaccurate. Le Pen lost by far more than was forecast.

So what’s going on?

The main criticism is that pollsters alter their results to match expectations. Opinion ends up shaping the polls, when the polls are supposed to be impartial.

In a review of why political polls have been inaccurate, Mr Silver wrote: “Pollsters also have a lot of choices to make about which turnout model to use.” When results contradict conventional wisdom, the methodology gets a shake-up. That can mean changing how demographic groups are weighted or how undecided voters are classified. Mr Silver added: “An exercise conducted by The New York Times Upshot blog last year gave pollsters the same raw data from a poll of Florida and found they came to varied conclusions, showing everything from a four-point lead for Clinton to a one-point lead for Trump.”

On this side of the Atlantic, political pundit Dan Hodges took aim at the pollsters for wilful distortion. He said they altered their results to match those of other pollsters to avoid being the odd man out – what he called “herding”. He wrote: “Pollsters deliberately herd. This is not an allegation or conspiracy theory. It’s a statistically proven fact.”
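How might herding show up in the numbers? One rough way to think about it: if polls of the same size were truly independent, random sampling alone would make them disagree by a predictable amount. If published polls agree far more closely than that, something other than chance is pulling them together. The sketch below is a simple heuristic under that assumption, not a formal significance test and not the method Mr Hodges or any pollster used; the function names and the six poll figures are hypothetical, chosen only for illustration.

```python
import math
import statistics


def sampling_spread(shares, sample_size):
    """Standard deviation we would expect between independent polls of the
    same size if random sampling error were the only source of difference."""
    p = statistics.mean(shares)
    return math.sqrt(p * (1 - p) / sample_size)


def herding_ratio(shares, sample_size):
    """Observed spread between polls divided by the spread chance predicts.

    A ratio well below 1 means the polls agree more closely than sampling
    error alone would allow, one possible sign of herding. This is a rough
    heuristic, not a formal statistical test.
    """
    return statistics.stdev(shares) / sampling_spread(shares, sample_size)


# Hypothetical party shares from six polls of 1,000 people each
polls = [0.34, 0.34, 0.35, 0.34, 0.35, 0.34]
print(f"herding ratio: {herding_ratio(polls, 1000):.2f}")
```

In practice pollsters use different sample sizes and methods, which should make genuinely independent polls spread out more than this baseline, not less, so a low ratio is all the more striking.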

*French presidential candidate Marine Le Pen lost this month’s election by far more than forecast.*

Does this mean polling is fundamentally flawed?

Not if you talk to those who know. Mike Smithson, a former BBC journalist who runs PoliticalBetting.com, says he has a golden rule: the poll which shows Labour doing worst is the right one. He’s relaxed about the so-called errors. “The polls got it pretty spot on in 2005 and not too far off in 2010,” he says. “The election in 2015 was particularly bad, for the reason that they included too many non-voters. This time action has been taken. The bias might be the other way.”

He also points out that the press are hyperactive. A swing within the margin of error triggers a tsunami of excited headlines.
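It helps to see how big that margin of error actually is. A minimal sketch, assuming a simple random sample and the conventional 95 per cent confidence level: the margin on one party’s share p in a poll of n people is roughly 1.96 × √(p(1 − p)/n). Real polls are weighted, which usually widens the true margin, so treat these figures as a floor. The function names are our own, for illustration only.

```python
import math


def margin_of_error(share, sample_size, z=1.96):
    """Approximate 95% margin of error for a single poll share.

    Assumes a simple random sample; weighting in real polls tends
    to make the true margin somewhat larger.
    """
    return z * math.sqrt(share * (1 - share) / sample_size)


def change_is_noise(old_share, new_share, n_old, n_new, z=1.96):
    """True if the change between two independent polls is small enough
    to be explained by sampling error alone."""
    standard_error = math.sqrt(
        old_share * (1 - old_share) / n_old
        + new_share * (1 - new_share) / n_new
    )
    return abs(new_share - old_share) <= z * standard_error


# A party on 35 per cent in a poll of 1,000 people
moe = margin_of_error(0.35, 1000)
print(f"35% +/- {moe * 100:.1f} points")  # prints "35% +/- 3.0 points"

# A two-point rise between two polls of 1,000 people each
print(change_is_noise(0.35, 0.37, 1000, 1000))  # prints True
```

Note that the margin on a change between two polls is wider than the margin on either poll alone, because both polls carry their own sampling error. A two-point “surge” in a poll of 1,000 is exactly the kind of movement that can be pure noise.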

The industry itself is sanguine. Ben Page, chief executive of pollster Ipsos MORI, says political polling is a tiny subset of the industry. “It’s 0.1 per cent of our business,” he says. “Some of the biggest agencies like Nielsen don’t bother with political work. Others, like YouGov, are known by the public, but [YouGov] is the 25th largest.”

He says that in most elections the polling is accurate. Elections such as the Dutch election, where the polls proved accurate, tend to get overlooked. The key point is that political polling is a pretty unusual beast. “There’s very little crossover from politics to industry,” says Mr Page.

Commercial users of polling make use of a vast array of techniques to refine the results. Qualitative interviews are a great way to scope a new subject. This involves open-ended conversations with around 30 people from the target market. Tracking is a big area, and Ipsos MORI monitors volunteers’ internet and smartphone usage. And ethnographic research takes brands into the daily routines of consumers.

“We have 100 documentary makers,” says Mr Page. “People can’t tell you how they brush their teeth or what’s in their make-up cabinet. You need to go in and find out.” Brands are by no means relying simply on a limited set of questions answered by 1,000 random people.

Using politics to analyse the polling industry is always going to be of limited use. In politics, a swing of a single percentage point can be the difference between victory and defeat. And the UK political landscape is multi-dimensional. Wales, for example, has five or six parties trading votes in each constituency.

Politics is a great way to tease out nuances in how polling works, but it’s no guide to the health of the industry.