A proactive and dynamic response to digital identity security is now critical. Latest figures from fraud prevention organisation Cifas show there has been a sharp rise in identity fraudsters applying for loans, online retail, telecoms and insurance products.

Simon Dukes, chief executive of Cifas, says: “We have seen identity fraud attempts increase year-on-year, now reaching epidemic levels, with identities being stolen at a rate of almost 500 a day.”

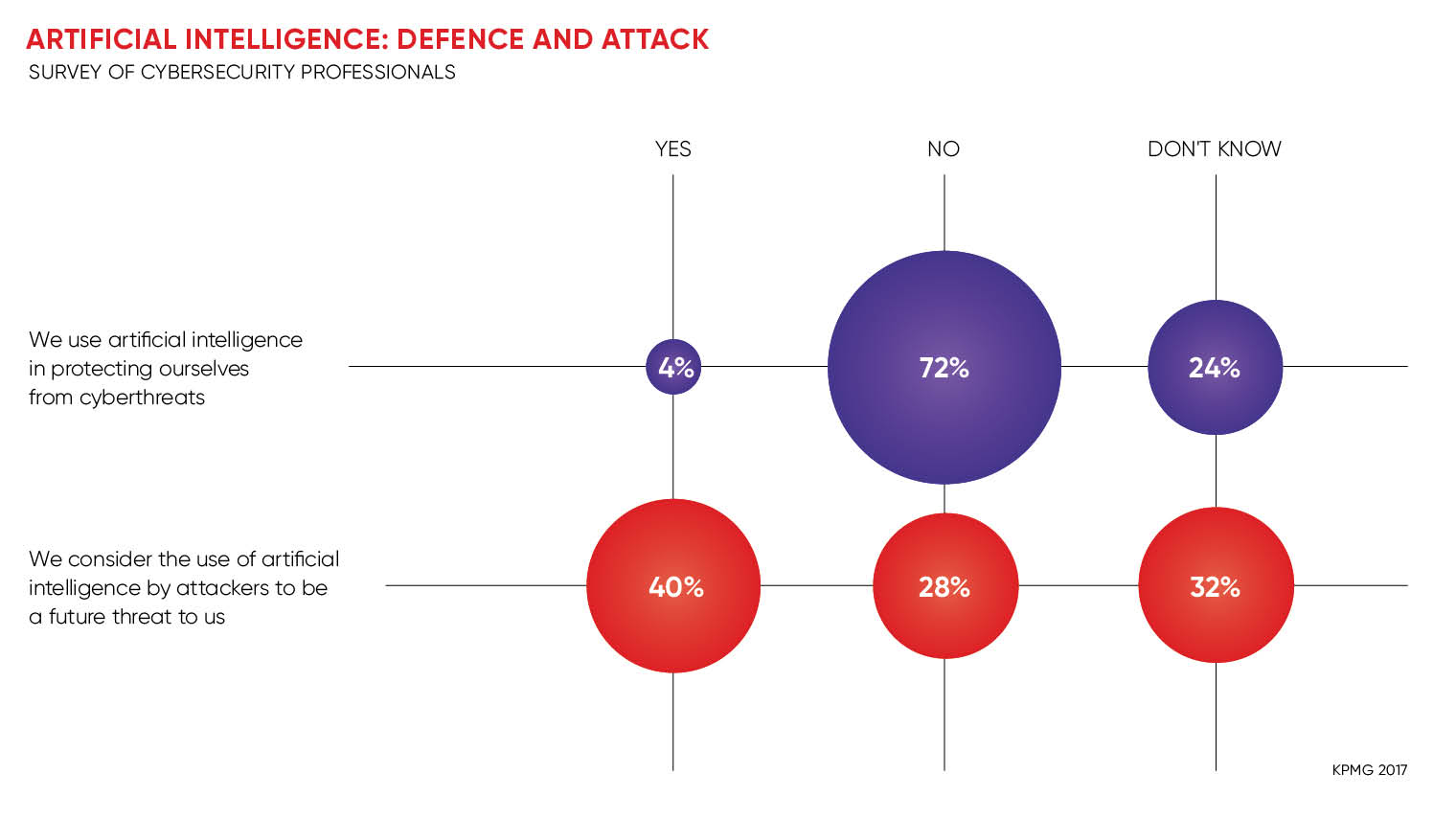

Proving your identity has always been essential, but never more so than in the digital landscape. It’s not surprising that artificial intelligence (AI) and machine-learning are being rapidly developed as an aid to identity authentication.

The risk of chargebacks, botnet attacks or identity theft is leading enterprises to deploy intelligent systems that do not simply look at publicly available data to identify a person. Earlier this year, for instance, Sift Science announced its Account Takeover Prevention, which can detect and block illegitimate login attempts.

The Cyber Security Breaches Survey 2017 revealed that just under half (46 per cent) of all UK businesses identified at least one cybersecurity breach or attack in the last 12 months. This rises to two thirds among medium-sized firms (66 per cent) and large firms (68 per cent). Protecting the personal data of their customers is now a commercial imperative.

Traditional data, such as name, address, email, date of birth and IP address, and biometrics, such as voice, fingerprint and iris scan, are being joined by behavioural characteristics that are unique to the individual. This is necessary because much of the traditional personal data is available via public record or can be purchased on the dark web. Behaviour, however, isn’t a tangible piece of data that can be purchased, which makes this form of security highly attractive for enterprises and organisations.

The issue has been analysing the masses of data a consumer’s digital footprint could contain. This is the province of AI and machine-learning that can see patterns in the data collected and accurately assign this to an individual as their digital ID. Just checking information on credit agencies, for instance, is no longer robust enough in the face of cybercriminals who can create synthetic personas.

To combat spoofing attacks, AI and machine-learning are being used widely in a variety of security applications. One of the most recent comes from Onfido, whose Facial Check with Video prompts users to film themselves performing randomised movements. Using machine-learning, the short video is then checked for similarity against the image of a face extracted from the user’s identity document.

For all enterprises and organisations, the authorisation of payments is vital. Johan Gerber, executive vice president of security and decision products at Mastercard, explains their approach: “Artificial intelligence and machine-learning are crucial security capabilities to interpret the complexity and scale of data available in today’s digitally connected world.”

How you behave online will become a critical component of your identity. However, AI and machine-learning systems will need to be sophisticated enough to understand when someone changes their behaviour without malicious intent. For instance, when you are on holiday, your digital footprint changes. AIs would need access to your travel arrangements to ensure your credit card isn’t declined because of anomalous behaviour. These systems are coming from a new breed of security startups, including Checkr, Onfido and Trooly, that understand cyberthreats.

It is also becoming clear that businesses using more sophisticated security and identity verification systems suffer fewer cyberattacks. The Fraud and Risk Report 2017 from Callcredit illustrates this: only 5 per cent of businesses that have been victims of fraud this year have used any sort of behavioural data for fraud insights. Essentially, businesses that aren’t getting hit by fraudsters are using more sophisticated techniques.

Last year 63 per cent of cyberattacks involved stolen credentials, according to Verizon’s Data Breach Investigations Report. “By monitoring to ensure that all systems and data are behaving normally instead, enterprises can allow people to get on with their work and only intervene when someone is trying to access areas they shouldn’t,” says Piers Wilson, head of product management at Huntsman Security.

The current level of development with AI and machine-learning has already delivered new security systems that are in use today. Mastercard’s Decision Intelligence is a good example. However, AI and machine-learning are far from autonomous and still require high levels of supervision. They can clearly search vast quantities of data to respond to a specific question or task, such as authenticating the identity of a shopper. AIs can identify a change in behaviour and highlight an anomaly, but is this behaviour a threat?

Greg Day, vice president and chief security officer at Palo Alto Networks, concludes: “There is a bigger impact that machine-learning will have on the cybersecurity industry and that has to do with the collection and aggregation of threat intelligence. When cybercriminals ply their trade, they leave behind digital breadcrumbs known as ‘indicators of compromise’.

“When collected and studied by machines, these can provide tremendous insight into the tools, resources and motivations that these modern criminals have. As such, access to rich threat intelligence data and the ability to ‘learn’ from that data will ultimately empower organisations to stay one step ahead of cybercrime.”

As we all tend to fall into habits, including how we access digital services, our purchasing decisions, what devices we typically use, for how long and from which locations, these behaviours can all be used by AIs to build a profile of an individual.

If someone deviates from this behaviour, the AI can easily spot the change of pattern within the data that defines who we are. This “contextual intelligence” is the basis for rapidly developing security systems that could not function without advanced AI and machine-learning.
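To make the idea of a behavioural profile concrete, here is a minimal sketch in Python. It is an illustration only and does not represent how any vendor named in this article works. The class `BehaviourProfile`, its fields and the three-standard-deviation threshold are all hypothetical choices, and real systems would draw on far richer signals and trained models rather than simple counts.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class BehaviourProfile:
    """Hypothetical per-user profile built from past sessions."""
    login_hours: list = field(default_factory=list)
    devices: Counter = field(default_factory=Counter)
    locations: Counter = field(default_factory=Counter)

    def observe(self, hour, device, location):
        """Record one legitimate session in the profile."""
        self.login_hours.append(hour)
        self.devices[device] += 1
        self.locations[location] += 1

    def anomaly_score(self, hour, device, location):
        """Return 0 to 3: one point for each signal that breaks habit."""
        score = 0
        if device not in self.devices:
            score += 1  # device never seen before
        if location not in self.locations:
            score += 1  # location never seen before
        if len(self.login_hours) >= 2:
            mu = mean(self.login_hours)
            sigma = pstdev(self.login_hours) or 1.0  # avoid dividing by zero
            # Simplification: treats the hour as linear, ignoring midnight wrap-around.
            if abs(hour - mu) / sigma > 3:
                score += 1  # login time far outside the usual range
        return score


# Build a profile from habitual morning logins on one device in one city.
profile = BehaviourProfile()
for hour in (8, 9, 9, 10, 8):
    profile.observe(hour, "phone", "London")

usual = profile.anomaly_score(9, "phone", "London")
odd = profile.anomaly_score(3, "laptop", "Lagos")
print(usual, odd)
```

A high score only says the session is unusual, not that it is malicious. A holiday login would score just as highly here, which is why a score like this would feed a further check rather than block the customer outright.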