When Tesla boss and tech billionaire Elon Musk proclaimed that in the future, robots would “be able to do everything better than us… I mean all of us”, he wasn’t going out on a limb. For years, academics and experts had been warning that rapid developments in artificial intelligence (AI) and machine-learning were set to destroy hundreds of millions of jobs around the world, leaving many of us out of work.


But more recently experts have coalesced around a more nuanced point of view: that AI could help us to work faster and smarter, boosting productivity and creating as many – if not more – jobs than it displaces in the coming decades.

AI needs to build on work done by humans

One proponent of this idea is Mind Foundry, an AI startup spun out of the University of Oxford’s Machine Learning Research Group in 2015. The firm says its state-of-the-art machine-learning algorithms are already helping clients make better use of their data to gain “deeper insights” about their business. But chief executive Paul Reader readily admits there are certain things that algorithms will never be able to do without a human there to guide them.

“Automation is not the future, human augmentation is,” the former Oracle director tells Raconteur. “Algorithms can’t tell you how you can create value in your business; asking those sorts of questions is an innately human capability. Algorithms rely on humans having done that work first.”

A 2018 study by the accountancy giant PwC forecasted that machines would create as many new jobs in the UK as they destroyed over the next 20 years – although it said there would be “winners and losers” by industry sector, and many roles were likely to change.

Rob McCargow, director of AI at PwC in the UK, says he is cautiously optimistic about the job-creating potential of AI, and believes that “augmentation” will play a big part in the future workforce – be it by enhancing the skills we already have or freeing us up to do more interesting or important things.

He accepts that most industries will delegate tasks and roles to machines as time goes on, and that this will displace jobs. But he says humans will still be needed to oversee these systems to make sure they work properly.

Is AI better suited to making small, less important decisions?

Machine-learning systems can already discern patterns in huge swathes of data much faster than humans can, but they are fallible. Take the internal recruitment engine that Amazon was reportedly developing to enhance its search for top software engineers and other technical posts.

Last year, Reuters reported that the ecommerce giant had scrapped the system after realising that it was not selecting candidates in a gender-neutral way. It turned out Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the firm over a ten-year period – and most came from men.

“However smart these systems become, there is an increasing need to check the findings and interpret results,” Mr McCargow says. “Most of us are happy to let AI make decisions for us about trivial things, like movie recommendations, but we are less so when there’s any risk involved.”

He says human oversight of AI apps will be needed in any regulated industry, including banking, healthcare, diagnostics and insurance, because firms will need to ensure decisions have been reached transparently and fairly, and regulators will want to know how decisions have been made.

The rise of the “data ethicist”

AI should also augment the way we work, allowing us to be more productive. Most experts agree that roles involving more repetitive tasks are at a higher risk of automation – think transportation or manufacturing. However, offloading them onto robots could free us up and allow us to focus on other things, says James O’Brien, a research director at IDC.

“Anywhere there are stretched resources, and/or too much information or data to manage, is a great place to start with an AI-led strategy,” he says.

He gives the example of the Babylon Health app, a subscription-based service in the UK that allows you to book video consultations with UK GPs. It features AI-powered chatbots and a symptom checker. “It frees up GPs and augments their stretched resources,” he says.

Mr O’Brien expects that as roles requiring fewer innately human skills are automated, others that do require them, such as creative roles or those in social care, will expand. We could also see totally new sorts of jobs, enabled by AI, emerging.

One area could be in so-called “social physics”, he says, where humans use machine-learning to crunch masses of structured and unstructured data to gain better insights into social problems or public health issues. Firms are already hiring “data ethicists” too, to look beyond immediate risks posed by AI programs and monitor unintended consequences of the technology.

“We may also need to create roles to help with the increasing convergence of new technologies, as AI is used in combination with 3D printing, the internet of things and augmented and virtual reality,” says Mr McCargow.

AI raising questions about nature of work, and humanity itself

Much further into the future, some have suggested we could even see human workers being physically augmented with AI systems. Facebook’s Mark Zuckerberg, for example, has invested in a company that, among other things, is researching ways to implant computer chips in the human brain, albeit to cure neurological diseases. And Tesla’s Mr Musk has reportedly backed a brain-computer interface venture called Neuralink.

“Over time I think we will probably see a closer merger of biological intelligence and digital intelligence,” Mr Musk told a conference in Dubai in 2017.

While that sort of augmentation may sound like science fiction, experts do expect to see a tangible economic boost from AI in the near term. The Organisation for Economic Co-operation and Development argues that data-driven innovation will be a “core asset” of 21st-century global growth, driving productivity and efficiency, fostering new industries and products, and creating “significant competitive advantages”.

PwC estimates that AI could add up to $15.7 trillion to the global economy in 2030 – more than the current output of China and India combined. However, Mr McCargow warns of big obstacles to realising this potential.

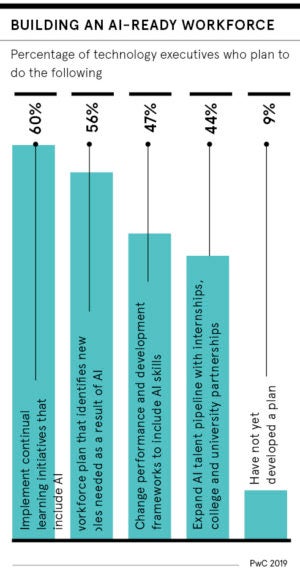

Firstly, he says the global labour force will need to be retrained for the jobs of tomorrow, and that will take time and cost money. Moreover, if people aren’t upskilled, the threat of job displacement will rise.

“You then find yourself in a conversation about the purpose of work – do we always need to work, or should the purpose of AI and machine-learning be to free us up for other things? If there are fewer jobs, will there be a social safety net or universal basic income?”

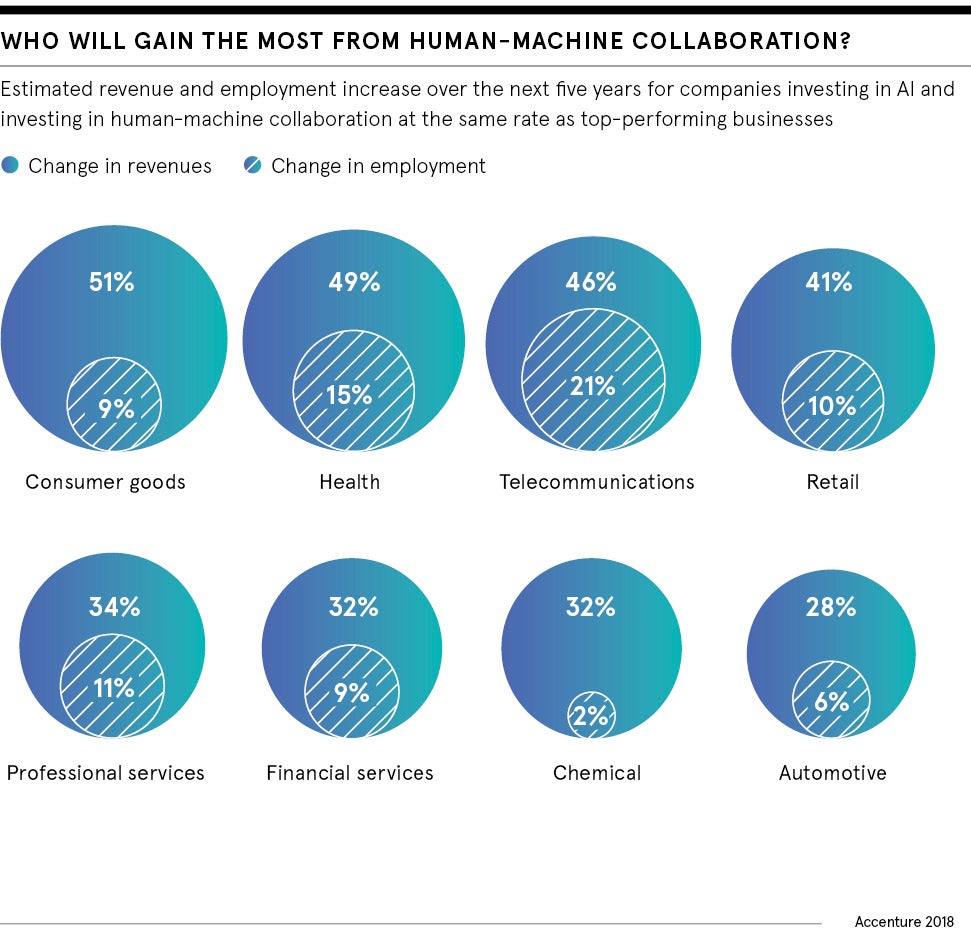

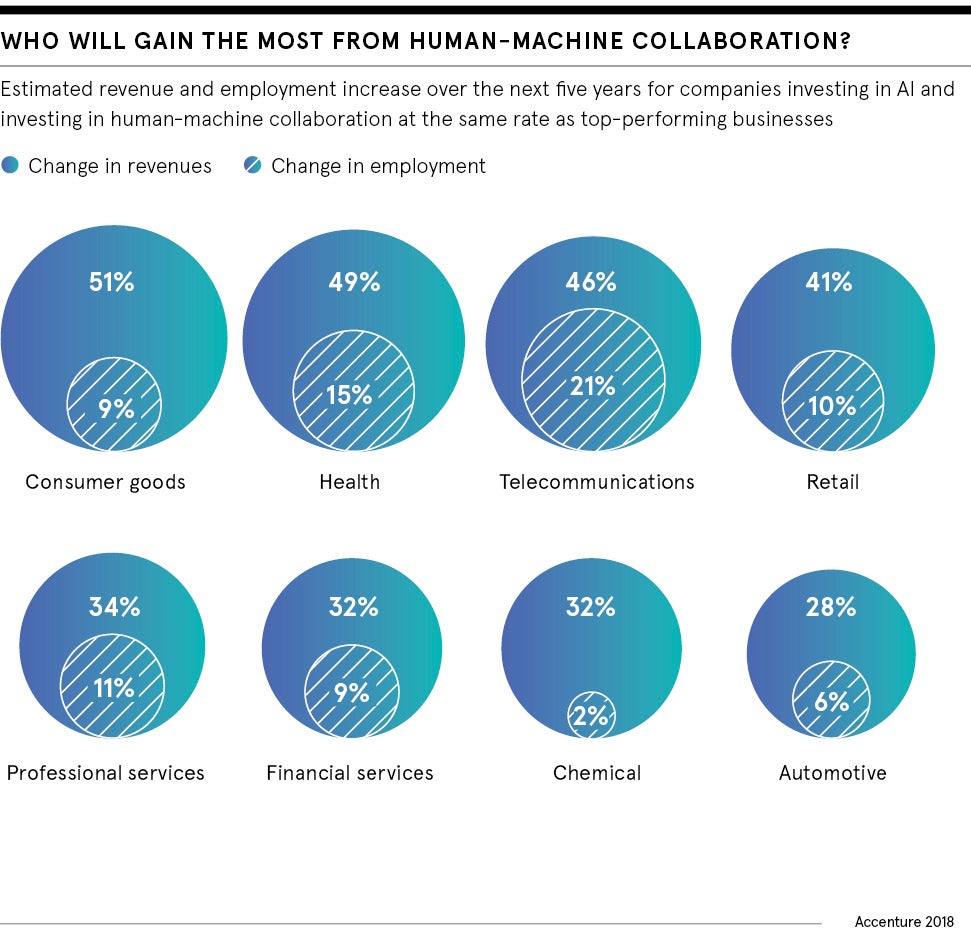

Who will be the AI winners and losers?

He adds that while some countries, such as the United States and China, will benefit from the AI revolution, developing regions like Africa could miss out. That is because the intellectual property rights to any new innovations will remain in the country that develops them, along with most of the economic benefits that accrue from them.

Mr Reader is more optimistic, though, and believes humans have always adapted to disruptive new innovations and will continue to do so. When it comes to retraining the workforce, he says, it will be an “incremental” process rather than a massive step change.

As for certain regions and professions missing out, he accepts there are likely to be winners and losers. But he points out data scientists are now being hired in almost every function of big companies – from the finance department to the mail room. Other wrinkles will likely iron themselves out in time, too.

“Throughout history innovations have come along like electricity and steam, and they do displace jobs. But it is about how we shape those innovations. Any tech can be used for good or for evil, and we want to use it for good.”
